Measuring VaR? Are you sure?

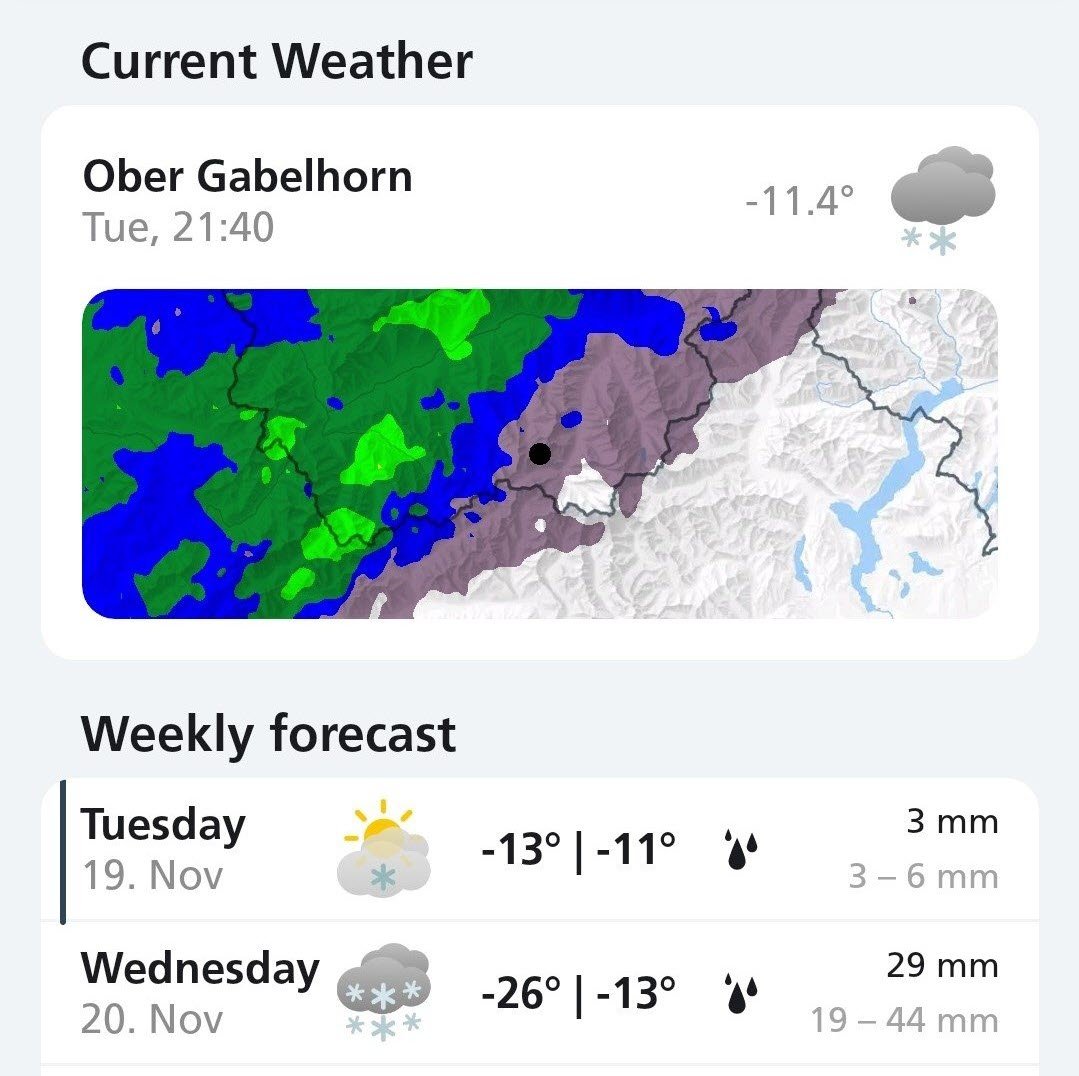

Imagine a world blind to the sky, where weather forecasts dictate reality. A world where truth is lost, and we're trapped in a cycle of manufactured weather.

(Photo source: MeteoSuisse.com)

Absurd, you say? Yet for 30+ years, this is what we risk managers have all been doing: we have mistaken Value at Risk (VaR) forecasts for reality and confused model predictions with truth.

Although the phrase is widely used in financial risk departments, textbooks, and regulations, "measuring VaR" is a misnomer. No one has ever truly measured the VaR of any portfolio. Instead, we rely on fallible models (parametric, historical, ML-based, and more) to forecast VaR, and forecasting is not measuring. Models are frequently proven wrong because their underlying assumptions fail to keep pace with an ever-changing risk landscape. Even so, these forecasts are taken at face value and used to manage the actual risk of financial institutions.

Reality checks for risk predictions are known as backtests. By comparing a series of predicted risks with the losses actually realized, backtests make it possible to assess the adequacy of risk models and to detect underestimation of risk. VaR backtesting has been common practice since the Basel Committee introduced it in 1996, in its market risk amendment to the Basel I Accord.
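
A minimal sketch of such a backtest, assuming daily 99% VaR forecasts, losses recorded as positive numbers, and the Basel traffic-light thresholds for a 250-day window (0 to 4 exceptions green, 5 to 9 yellow, 10 or more red). The function names and the simulated losses are hypothetical, chosen only for illustration:

```python
import random
from statistics import NormalDist

def count_exceptions(var_forecasts, losses):
    """Count the days on which the realized loss exceeded the VaR forecast.

    Losses are positive numbers (a gain is a negative loss), using the same
    sign convention as the VaR forecasts.
    """
    if len(var_forecasts) != len(losses):
        raise ValueError("forecasts and losses must have the same length")
    return sum(1 for var, loss in zip(var_forecasts, losses) if loss > var)

def basel_zone(exceptions):
    """Traffic-light zone for 250 daily 99% VaR forecasts."""
    if exceptions <= 4:
        return "green"
    if exceptions <= 9:
        return "yellow"
    return "red"

# Hypothetical data: 250 days of standard-normal losses.
rng = random.Random(42)
losses = [rng.gauss(0.0, 1.0) for _ in range(250)]

# A correct 99% VaR forecast vs. one that reports the 95% quantile instead.
good_var = NormalDist().inv_cdf(0.99)
bad_var = NormalDist().inv_cdf(0.95)

for label, var in [("correct model", good_var), ("understated model", bad_var)]:
    n = count_exceptions([var] * len(losses), losses)
    print(f"{label}: {n} exceptions -> {basel_zone(n)} zone")
```

Note that the test reduces each day to a single bit: whether the loss exceeded the forecast, not by how much.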

Measuring VaR, however, would amount to observing it a posteriori. But there is no notion of “realized VaR” analogous to “realized variance”. Why?
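
For contrast, realized variance is a statistic of observed data. Here is a minimal sketch of the standard estimator (the sum of squared intraday log returns) applied to a hypothetical price path: once the trading day is over, the number is simply computed from prices that were actually observed. No analogous function maps a day's observed prices to that day's VaR.

```python
import math

def realized_variance(prices):
    """Realized variance of one day: sum of squared intraday log returns."""
    if len(prices) < 2:
        raise ValueError("need at least two observed prices")
    return sum(math.log(curr / prev) ** 2 for prev, curr in zip(prices, prices[1:]))

# Hypothetical 5-minute prices observed during one trading day.
intraday_prices = [100.0, 100.4, 99.9, 100.2, 100.8, 100.5]
print(f"realized variance: {realized_variance(intraday_prices):.6f}")
```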

As a recent paper shows, VaR is fundamentally unobservable. You can't simply look up at the sky and declare, "Today, my VaR has been $123.45," as you would with the weather. The technical reason is that the VaR backtest (which is unique, as shown in Corollary 3.9) lacks "sharpness" (see Definition 3.13, Remark 3.15, and Proposition 3.17). This means it doesn't estimate the discrepancy between predicted and actual risk, the latter remaining entirely unknown (Remark 3.18). In other words, the VaR backtest might indicate with high probability that your model is flawed (a Basel regulatory "red zone"), but it doesn't quantify the difference between the predicted VaR and the true VaR, which could be as small as $1 or as large as $1 trillion; we'll never know.
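
A hypothetical example, not taken from the paper, makes the point concrete. Take two loss distributions, a standard normal and a rescaled Cauchy (an extreme heavy-tailed choice, used here only because its quantiles have a closed form), and a flawed model that reports the 95% quantile as the 99% VaR in both cases. The Cauchy is rescaled so that the two forecasts coincide. Units are arbitrary.

```python
import math
from statistics import NormalDist

LEVEL = 0.99         # confidence level of the VaR being forecast
WRONG_LEVEL = 0.95   # quantile the flawed model actually reports
WINDOW = 250         # trading days in a Basel-style backtest window

normal = NormalDist()

def cauchy_quantile(p, scale=1.0):
    """Closed-form quantile of a Cauchy distribution centered at 0."""
    return scale * math.tan(math.pi * (p - 0.5))

def cauchy_cdf(x, scale=1.0):
    """Closed-form CDF of a Cauchy distribution centered at 0."""
    return 0.5 + math.atan(x / scale) / math.pi

# The same forecast for both portfolios: the normal 95% quantile,
# with the Cauchy rescaled so that its 95% quantile matches it.
forecast = normal.inv_cdf(WRONG_LEVEL)
cauchy_scale = forecast / cauchy_quantile(WRONG_LEVEL)

# What the backtest can see: the probability that a loss exceeds the forecast.
exceed_a = 1 - normal.cdf(forecast)
exceed_b = 1 - cauchy_cdf(forecast, cauchy_scale)

# What the backtest cannot see: the true 99% VaR and the size of the error.
true_var_a = normal.inv_cdf(LEVEL)
true_var_b = cauchy_quantile(LEVEL, cauchy_scale)

for name, p, true_var in [("normal", exceed_a, true_var_a),
                          ("cauchy", exceed_b, true_var_b)]:
    print(f"{name}: forecast={forecast:.3f}  exceedance prob={p:.3f}  "
          f"expected exceptions per {WINDOW} days={p * WINDOW:.1f}  "
          f"true VaR={true_var:.3f}  gap={true_var - forecast:.3f}")
```

Both models breach their forecast with the same 5% probability, i.e. 12.5 expected exceptions per 250 days (red-zone territory), so the backtest raises the same alarm in both cases. Yet the gap between forecast and true VaR is roughly ten times larger for the Cauchy portfolio, and nothing in the exception count reveals it.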

For these reasons, a bank's VaR report should indicate "N/A" whenever a backtest fails for a specific portfolio, because in that case the true VaR is anyone's guess. In practice, this is not what typically happens. Backtests are often treated as a mere regulatory burden and are frequently skipped for internal control purposes. As a result, inaccurate VaR estimates are routinely published and relied upon.

Rivers of ink have been spilled (blame it on us!) over VaR’s lack of subadditivity (and thus of coherence) and its blindness to tail events, both of which raise significant concerns about its use in prudential risk management and regulation. Yet a far more fundamental flaw of VaR has been overlooked for years: its lack of observability.

Science, in any field, proceeds through experiments in which model predictions are tested against real-world observations. Predicting unobservable quantities is not a scientific endeavor, and it has led to the misconception, widespread in the risk industry today, that models are reality.

A natural question then arises: are there other risk measures that are observable instead? How about Expected Shortfall? Variance? That's a topic for another discussion. Stay tuned.